In the realm of machine learning, data serves as the fundamental building block upon which algorithms learn and make precise predictions. Nonetheless, raw data usually lacks the essential context required for machines to grasp its meaning. This is where annotation, the intricate process of labeling and tagging data, emerges as a pivotal catalyst. Annotation seamlessly bridges the gap between human comprehension and machine understanding, enabling algorithms to learn patterns, make accurate predictions, and drive innovation across diverse industries.

The Indispensable Role of Annotation in Advancing Machine Learning:

Annotation serves a pivotal role in the realm of machine learning, influencing the entire process through several fundamental aspects:

- Training Data Preparation: Annotated data serves as the training ground for machine learning models. By providing labeled examples, annotation empowers algorithms to identify and learn patterns, correlations, and features necessary for accurate predictions.

- Enhancing Model Accuracy: Accurate and comprehensive annotations contribute to improved model accuracy. By labeling data with relevant information, such as object boundaries, semantic classes, or sentiment, annotators provide the necessary guidance for algorithms to make precise predictions.

- Fine-grained Understanding: Annotation enables machines to perceive and comprehend complex data. By annotating various aspects such as objects, relationships, sentiment, or intent, models gain a deeper understanding of the data, enabling them to make nuanced and context-aware predictions.

- Domain Adaptation: Annotation facilitates the adaptation of machine learning models to particular domains. By labeling data specific to a particular field, such as medical images, autonomous driving scenarios, or customer sentiment in e-commerce, models can specialize in making accurate predictions within those domains.

Exploring Various Techniques of Image Annotation in Machine Learning

Image annotation is a prevalent form of annotation and encompasses several techniques that play a vital role in training machine learning models for various tasks, let’s understand each technique:

- Image Classification: The most basic yet powerful form of annotation that just involves the segregation of images based on different classes. These groups of segregated images are needed when training image classification types of machine learning models.

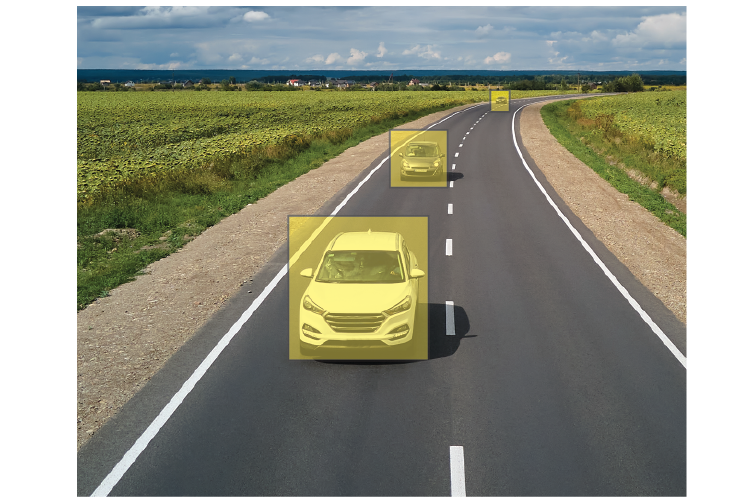

- Bounding Box Annotation: This includes forming a bounding box encapsulating the region of interest inside its bounds. This helps in accurately knowing the object’s coordinates which can be further used to deduce the object’s location and size Bounding box annotation finds frequent application in tasks involving object detection.

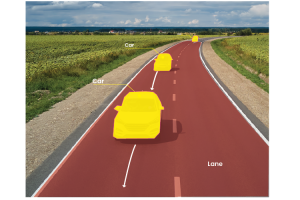

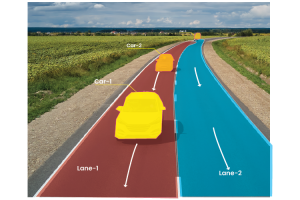

- Semantic Segmentation: In semantic segmentation, annotators assign class labels to each pixel in an image, allowing models to understand and differentiate between various objects or regions. This method offers an intricate comprehension of visual composition.

- Instance Segmentation: Much like semantic segmentation, instance segmentation encompasses the labeling of individual pixels associated with an object, while also distinguishing among multiple occurrences or instances of the same class. This technique is advantageous when there are overlapping objects in an image.

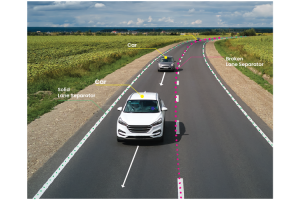

- Polygon Annotation: This technique is used for annotating objects with irregular shapes, allowing annotators to draw precise boundaries using polygons. Polygon annotation is commonly employed for segmenting objects like roads, buildings, or natural landscapes.

- Landmark Annotation: Landmark annotation involves marking specific points or landmarks on an object, enabling models to understand and track spatial features accurately. It is commonly used in applications like human pose estimation, facial recognition and medical imaging.

Innovative Approaches for Annotated Data Generation in Dataset Preparation

Generating high-quality annotated data is a pivotal stride within the machine-learning pipeline. Here are several strategic approaches to generating annotated data:

- Manual Annotation: Manual annotation involves human annotators carefully labeling and tagging data based on predefined guidelines. This approach requires domain expertise and can be time-consuming and labor-intensive. However, manual annotation allows for precise and accurate annotations, especially when dealing with complex or subjective tasks.

- Semi-Supervised Learning: In semi-supervised learning, a small portion of the data is manually annotated, and then the model is trained to predict annotations for the remaining unlabeled data. This approach combines the benefits of manual annotation with the efficiency of automated annotation, as the model can generalize the annotations to new examples. Techniques such as active learning and self-training can be employed to iteratively improve the model’s performance.

- Transfer Learning and Pre-trained Models: Another approach is to leverage pre-existing annotated datasets or pre-trained models. Transfer learning allows for the transfer of knowledge from a model trained on a large annotated dataset to a new task with limited annotated data. When we adjust the pre-trained model using the new task’s labeled data, it can rapidly learn the new task better and also improvise the prediction accuracy.

- Synthetic Data Generation: In some cases, it may be challenging or costly to obtain large amounts of annotated real-world data. In such situations, synthetic data generation techniques can be used. Synthetic data is artificially created, either through computer-generated imagery or data augmentation techniques. The annotations can be generated simultaneously with the synthetic data creation, providing labeled examples for training machine learning models.

Factors to be Considered for Image Annotation:

Ensuring effective image annotation involves a careful balance of several critical factors that contribute to both the accuracy and quantity of annotated data. Let’s delve into the pivotal elements that shape the process:

- Quality: Accurate, consistent, and reliable annotations are crucial for training reliable machine learning models. High-quality annotations ensure that models learn from reliable ground truth, minimizing the risk of biased or inaccurate predictions. Quality control measures, such as multiple annotator consensus or expert validation, can be employed to ensure annotation accuracy.

- Quantity: You surely need a good amount of labeled data to train an accurate machine-learning model. A larger dataset allows models to learn a broader range of patterns, improving generalization and performance. However, while aiming for quantity one should not overlook quality. Balancing the trade-off between quality and quantity is essential, ensuring a diverse and representative dataset.

- Domain Expertise: Domain expertise among annotators is crucial for understanding the context and annotating data accurately. Experts who possess deep knowledge of the domain can provide more nuanced annotations, improving model performance.

- Annotation Guidelines: Having clear and precisely outlined annotation guidelines is crucial to keep annotations consistent. These guidelines should cover unclear scenarios, possible tricky situations, and any unique project needs. Detailed instructions on object boundaries, label definitions, and annotation conventions ensure uniformity and minimize ambiguity.

- Iterative Feedback: Feedback loops between annotators, data scientists, and domain experts help refine annotations over time. Regular communication and feedback sessions ensure the continuous improvement of annotation quality and prevent potential issues. Iterative feedback helps address challenges, clarify guidelines, and resolve uncertainties.

Annotation forms the foundation for machine learning, enabling algorithms to make sense of complex and unstructured data. Through the process of labeling and tagging, annotation empowers models to learn patterns, adapt to specific domains, and make accurate predictions. The quality and quantity of annotations are crucial for developing reliable models that can address real-world challenges. By harnessing the power of annotation, VOLANSYS has the capability to unlock the true potential of machine learning, revolutionizing industries and shaping the future of technology.

Tools have consistently been an integral aspect of the annotation process. The selection of the appropriate tool also indirectly enhances the efficiency of the entire data annotation pipeline. At VOLANSYS, we excel in utilizing industry-acclaimed tools like CVAT (Computer Vision Annotation Tool), VIA (VGG Image Annotator), Makesense.ai, and LabelImg.

Uncover the VOLANSYS’ impactful work on annotation through these compelling use cases:

Case Study 1: The precision of a machine learning model hinges on the data it’s trained with. At VOLANSYS, we attained unprecedented accuracy in a novel realm of vision-based machine learning solutions. This success can be largely attributed to the superior quality and quantity of annotated data we generated internally. Check out the complete case study showcasing our involvement in the annotation aspect of the electrical structure monitoring solution.

Case Study 2: In the healthcare domain, the prevention of false negatives is of paramount importance to mitigate potential fatalities. At VOLANSYS, we helped a US-based medical/healthcare solution provider to develop a critical computer vision-based remote patient monitoring solution. This advanced system is designed to detect instances such as patient falls or attempts to move from a minimally conscious state. Our work encompassed handling intricate and sometimes even illegible scenarios, yet we were able to achieve a first-in-class level of accuracy. This achievement was made possible by meticulously attending to intricate details during the annotation process.

To know more about our Machine Learning expertise, feel free to contact us.

About the Author: Kandarp Rastey

Kandarp is a seasoned senior engineer who has been an integral part of VOLANSYS, with a specialization in AI and ML technologies within the realm of embedded firmware. His primary focus lies in the creation, training, and optimization of AI/ML models. Additionally, he has made noteworthy contributions to the development of diverse Linux-based embedded products. His proficiency extends across wireless protocols and the IoT automation landscape. Endowed with certifications from reputed organizations, he embodies an agile tech enthusiast. Kandarp is notably driven by a fervent dedication to advancing vision-based ML and AI concepts.